Converting Binary to Text: Unveiling the Transformation Process

In the vast landscape of digital communication, binary code serves as the fundamental language that computers understand. It consists of only two symbols, 0 and 1, representing the binary digits or bits. While computers communicate effortlessly in binary, humans typically use text-based languages for communication. This prompts the need for converting binary to text, a process that unveils the transformation from machine-readable to human-readable. In this article, we will explore the intricacies of this conversion process, the significance it holds in the digital realm, and the methods employed to bridge the gap between the binary and textual worlds.

Understanding Binary Code:

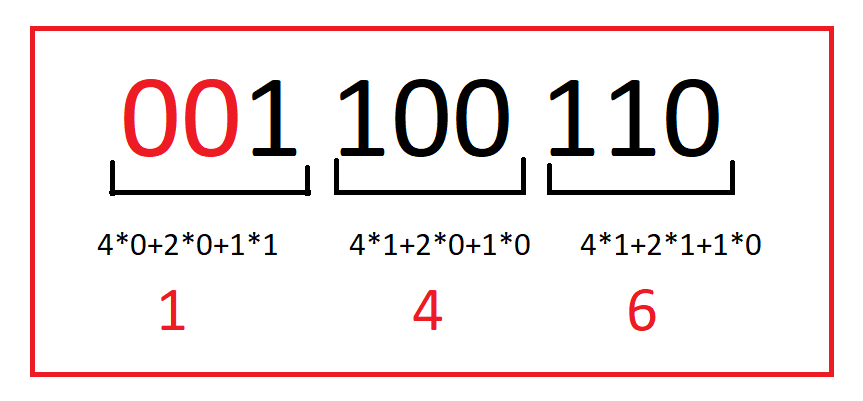

Before delving into the conversion process, it's essential to grasp the basics of binary code. In a binary system, each digit, or bit, can only have one of two values: 0 or 1. These binary digits are combined in groups to represent more complex information. For example, eight bits make up a byte, and a sequence of bytes can represent characters, numbers, or other data types.
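As a small illustration of how eight bits combine into one byte, the Python sketch below computes the numeric value of a bit string by processing it one digit at a time. The function name `bits_to_int` is an illustrative choice for this article, not part of any library.

```python
def bits_to_int(bits: str) -> int:
    """Return the integer value of a string made of '0' and '1' characters."""
    value = 0
    for bit in bits:
        if bit not in "01":
            raise ValueError(f"not a binary digit: {bit!r}")
        # Doubling shifts the running value one binary place to the left;
        # adding the new bit fills the freed lowest position.
        value = value * 2 + int(bit)
    return value


print(bits_to_int("01001000"))  # 72
print(int("01001000", 2))       # Python's built-in conversion gives the same value
```

In everyday code the built-in `int(bits, 2)` does the same job; the loop is only there to make the place-value arithmetic visible.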

In the binary system, every piece of information is expressed as a combination of these two digits. However, for humans accustomed to letters, numbers, and symbols, understanding and working with binary directly can be challenging. This is where the conversion from binary to text becomes indispensable.

The Need for Binary-to-Text Conversion:

While computers communicate seamlessly in binary, the human interface relies heavily on text-based languages. Text is the medium through which we convey information, and it is the foundation of most communication between humans and computers. Converting binary to text is, therefore, a crucial step in making the information stored and processed by computers accessible and meaningful to humans.

Several scenarios necessitate the conversion of binary to text:

1. Data Transmission:

During data transmission, information is often encoded in binary for efficiency. However, when humans need to interpret or analyze this data, it must be converted back into a readable text format.

2. File Formats:

Some file formats store data in binary for compactness and efficiency. For example, images, videos, and executable files are stored as binary data. To inspect or debug the contents of such files, it often helps to convert the relevant binary data into a textual representation.

3. Cryptography:

In the realm of cryptography, encrypted messages are handled as binary data. Once a message has been decrypted, the resulting bytes still have to be decoded into human-readable text.

4. ASCII and Unicode:

In the realm of text encoding, two widely used standards are ASCII (American Standard Code for Information Interchange) and Unicode. ASCII, developed in the early days of computing, uses a 7-bit binary code to represent characters. However, as technology evolved and the need for a more extensive character set arose, Unicode emerged.

Unicode defines a vast array of characters from different languages and symbol sets, and its encodings may use a variable number of bytes per character. The most common Unicode encoding is UTF-8, which can represent every character in the Unicode character set.
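To make the difference between ASCII-range characters and other Unicode characters concrete, here is a minimal Python sketch that prints the UTF-8 bytes of a few sample characters as bits. The helper name `utf8_bits` and the sample characters are assumptions chosen for illustration.

```python
def utf8_bits(ch: str) -> str:
    """Return the UTF-8 encoding of one character as space-separated 8-bit groups."""
    return " ".join(f"{byte:08b}" for byte in ch.encode("utf-8"))


for ch in ["A", "é", "€"]:
    encoded = ch.encode("utf-8")
    # ord() gives the Unicode code point; len(encoded) gives the UTF-8 byte count.
    print(f"{ch!r}: U+{ord(ch):04X}, {len(encoded)} byte(s): {utf8_bits(ch)}")
```

Characters in the ASCII range keep their single-byte form in UTF-8, while characters outside it take two or more bytes.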

Binary-to-Text Conversion Methods:

1. ASCII Encoding:

The ASCII encoding system provides a direct mapping between characters and their binary representations. In ASCII, each character is assigned a unique 7-bit binary code. However, modern systems often use 8 bits (1 byte) to represent ASCII characters.

The process of converting binary to text using ASCII involves breaking the binary code into 8-bit chunks, each representing a specific character. The ASCII table serves as the key to mapping these binary codes to their corresponding characters.

Let's take an example:

Binary: 01001000 01100101 01101100 01101100 01101111 00100000 01010111 01101111 01110010 01101100 01100100

Breaking this binary sequence into 8-bit chunks:

01001000 -> 'H'

01100101 -> 'e'

01101100 -> 'l'

01101100 -> 'l'

01101111 -> 'o'

00100000 -> (Space)

01010111 -> 'W'

01101111 -> 'o'

01110010 -> 'r'

01101100 -> 'l'

01100100 -> 'd'

So, the binary sequence '01001000 01100101 01101100 01101100 01101111 00100000 01010111 01101111 01110010 01101100 01100100' is converted to the text 'Hello World'.
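The chunk-by-chunk lookup can be sketched in a few lines of Python. This is one possible implementation, assuming the input is a string of space-separated 8-bit groups; the function name `binary_to_ascii` is hypothetical.

```python
def binary_to_ascii(binary: str) -> str:
    """Decode space-separated 8-bit groups into ASCII text."""
    chars = []
    for chunk in binary.split():
        # Each chunk must be exactly eight characters, all of them 0 or 1.
        if len(chunk) != 8 or set(chunk) - {"0", "1"}:
            raise ValueError(f"not an 8-bit binary chunk: {chunk!r}")
        code = int(chunk, 2)  # interpret the chunk as a base-2 number
        if code > 127:
            raise ValueError(f"{chunk} is outside the 7-bit ASCII range")
        chars.append(chr(code))  # look up the character for that code
    return "".join(chars)


message = (
    "01001000 01100101 01101100 01101100 01101111 00100000 "
    "01010111 01101111 01110010 01101100 01100100"
)
print(binary_to_ascii(message))  # Hello World
```

Here `chr` plays the role of the ASCII table: it maps each numeric code to its character.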

2. Unicode (UTF-8) Encoding:

The Unicode encoding, specifically UTF-8, is more versatile than ASCII and can represent characters from various languages and symbol sets. UTF-8 uses variable-length encoding, with one to four bytes per character.

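The conversion process involves splitting the binary sequence into bytes, using the leading bits of each byte to determine how many bytes belong to each character, extracting the Unicode code point from those bytes according to the UTF-8 encoding rules, and then mapping each code point to its character.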
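For the two-byte case, the UTF-8 layout is 110xxxxx 10yyyyyy, where the x and y bits together form the code point. The Python sketch below extracts a code point from such a pair by hand; the function name `decode_two_byte_utf8` is an illustrative assumption, and only the two-byte form is handled.

```python
def decode_two_byte_utf8(first: int, second: int) -> int:
    """Return the code point stored in a two-byte UTF-8 sequence."""
    # The lead byte of a two-byte sequence must look like 110xxxxx.
    if (first & 0b11100000) != 0b11000000:
        raise ValueError("first byte is not a two-byte lead byte")
    # Every continuation byte must look like 10yyyyyy.
    if (second & 0b11000000) != 0b10000000:
        raise ValueError("second byte is not a continuation byte")
    # Keep the 5 payload bits of the lead byte and the 6 of the continuation byte.
    code_point = ((first & 0b00011111) << 6) | (second & 0b00111111)
    if code_point < 0x80:
        raise ValueError("overlong encoding: value fits in a single byte")
    return code_point


cp = decode_two_byte_utf8(0b11000011, 0b10101001)
print(f"U+{cp:04X} {chr(cp)}")  # U+00E9 é
```

Three-byte and four-byte sequences follow the same pattern with longer lead-byte prefixes and more continuation bytes.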

Let's take an example:

Binary: 01001000 01101001 11000011 10101111 01110010 01100101

Breaking this binary sequence into bytes and grouping the bytes that belong to the same character:

01001000 -> 'H'

01101001 -> 'i'

11000011 10101111 -> Unicode Code Point U+00EF (ï)

01110010 -> 'r'

01100101 -> 'e'

So, the binary sequence '01001000 01101001 11000011 10101111 01110010 01100101' is converted to the text 'Hiïre'.
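In practice the UTF-8 rules rarely need to be applied by hand, because most languages ship a decoder. A minimal Python sketch, assuming space-separated 8-bit groups as input and using the hypothetical function name `binary_to_text`:

```python
def binary_to_text(binary: str, encoding: str = "utf-8") -> str:
    """Decode space-separated 8-bit groups into text using the given encoding."""
    # Turn each 8-bit group into a byte value, then collect them into bytes.
    data = bytes(int(chunk, 2) for chunk in binary.split())
    # bytes.decode applies the encoding rules, including multi-byte sequences.
    return data.decode(encoding)


sample = "01001000 01101001 11000011 10101111 01110010 01100101"
print(binary_to_text(sample))  # Hiïre
```

The two bytes 11000011 10101111 are consumed together and produce the single character U+00EF (ï); decoding raises `UnicodeDecodeError` if the bytes are not valid in the chosen encoding.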

Online Tools for Binary-to-Text Conversion:

As technology advances, online tools provide a quick and efficient way to perform binary-to-text conversion. These tools are user-friendly and often support multiple encoding standards, making them versatile for various applications.

Here's a brief overview of how these tools work:

1. Input Binary Data:

Users can input the binary data they want to convert into a text box provided by the online tool.

2. Select Encoding Standard:

Depending on the binary data's origin, users can select the appropriate encoding standard, such as ASCII or UTF-8.

3. Click Convert:

With the binary data and encoding standard specified, users can click a "Convert" button, and the tool will generate the corresponding text output.

4. Copy or Download Result:

The converted text is then displayed on the tool's interface, and users can either copy it for further use or download it as a file.
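The steps above can be approximated with a short Python sketch of what such a tool might do behind its "Convert" button. This is not the code of any particular online tool; the function name `convert` and its validation rules are assumptions made for illustration.

```python
def convert(binary_input: str, encoding: str = "ascii") -> str:
    """Validate user-supplied binary text and decode it with the chosen encoding."""
    # Step 1: accept the input, ignoring spaces and line breaks between bits.
    bits = "".join(binary_input.split())
    if not bits or set(bits) - {"0", "1"}:
        raise ValueError("input must contain only 0s and 1s")
    if len(bits) % 8 != 0:
        raise ValueError("number of bits must be a multiple of 8")
    # Step 2 is the `encoding` argument, for example "ascii" or "utf-8".
    # Step 3: group the bits into bytes and decode them.
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode(encoding)


# Step 4: the caller can display, copy, or save the returned text.
print(convert("0100100001101001"))            # Hi
print(convert("01001000 01101001", "utf-8"))  # Hi
```

Validating the input before decoding lets the tool report a clear message for malformed input instead of producing misleading output.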

Conclusion:

The transformation from binary to text is a fundamental process that bridges the gap between the digital language of computers and the human-readable world of text. Understanding the intricacies of binary code, text encoding standards like ASCII and Unicode, and the methods employed for conversion is crucial in various fields, including data transmission, file formats, and cryptography.

Whether you're a programmer deciphering binary-encoded data or an enthusiast exploring the foundations of digital communication, the ability to convert binary to text opens up a new dimension of understanding in the digital realm. Embracing the convenience of online tools further simplifies this process, making it accessible to a broader audience and facilitating efficient communication between humans and computers in the ever-evolving landscape of technology.